Exploring the field

Satellite photogrammetry in a nutshell

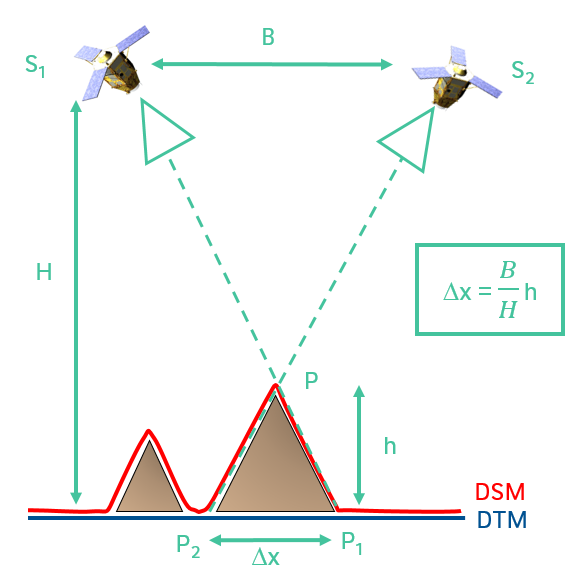

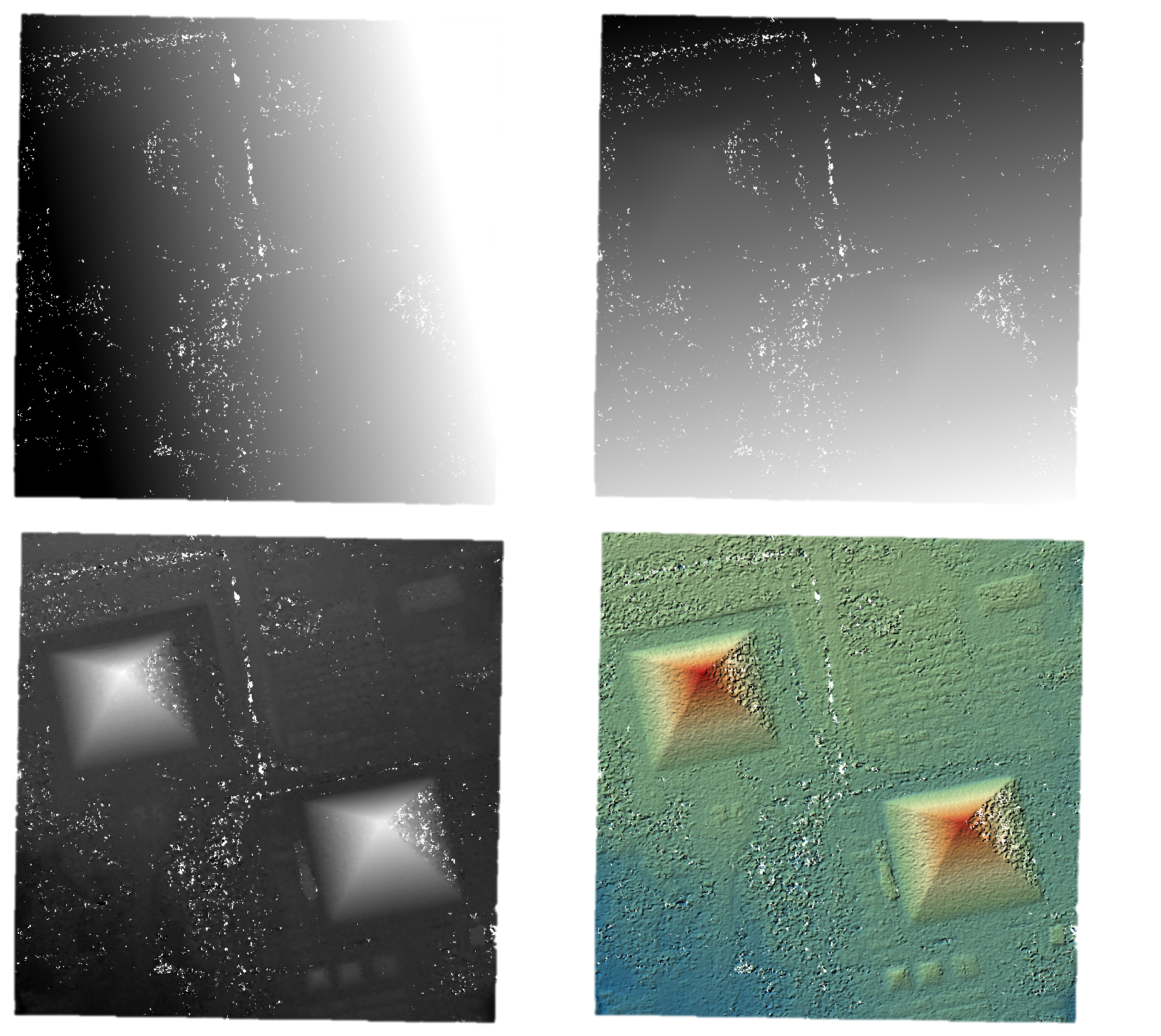

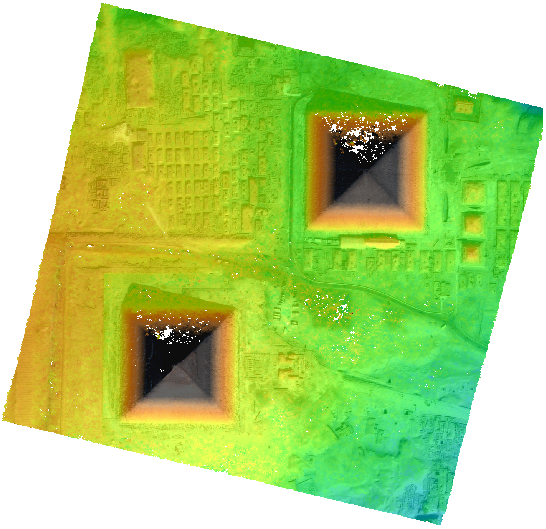

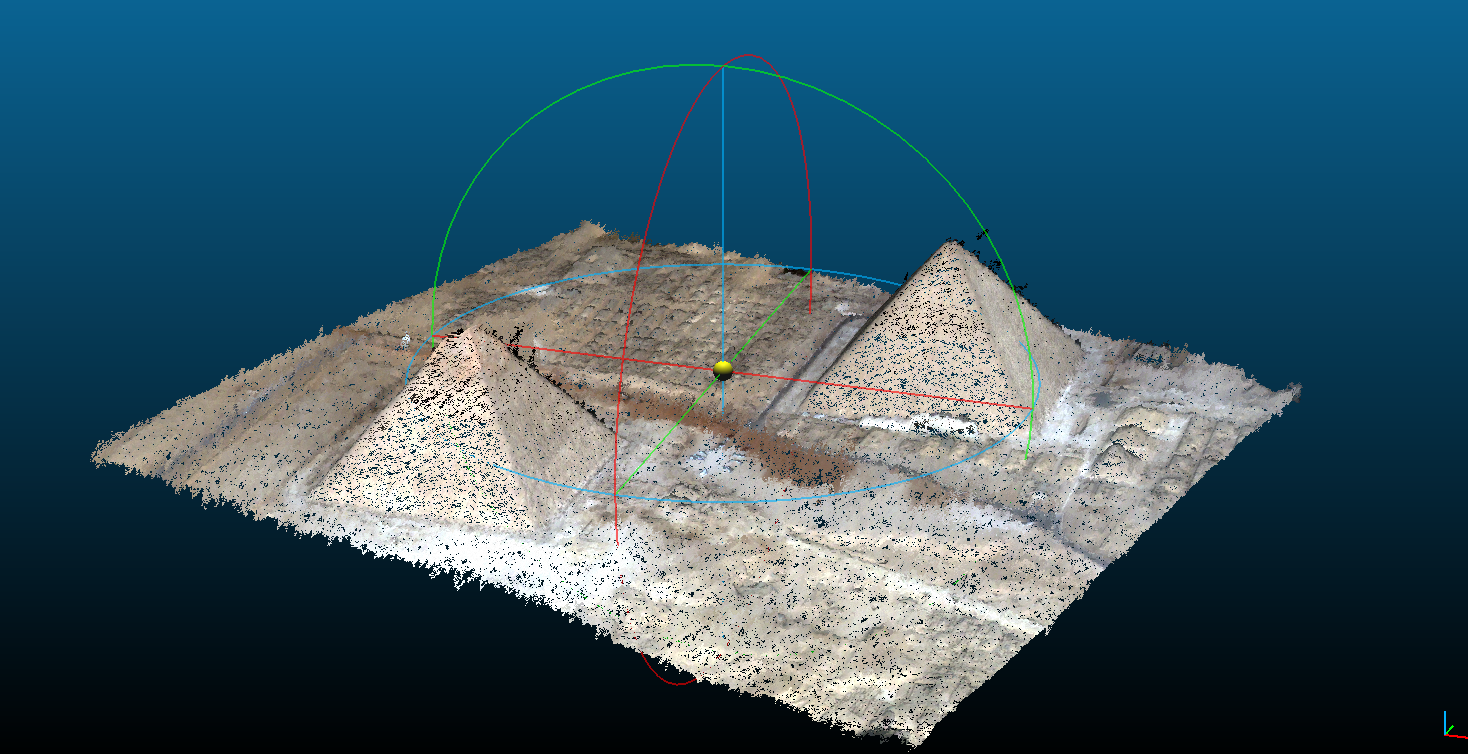

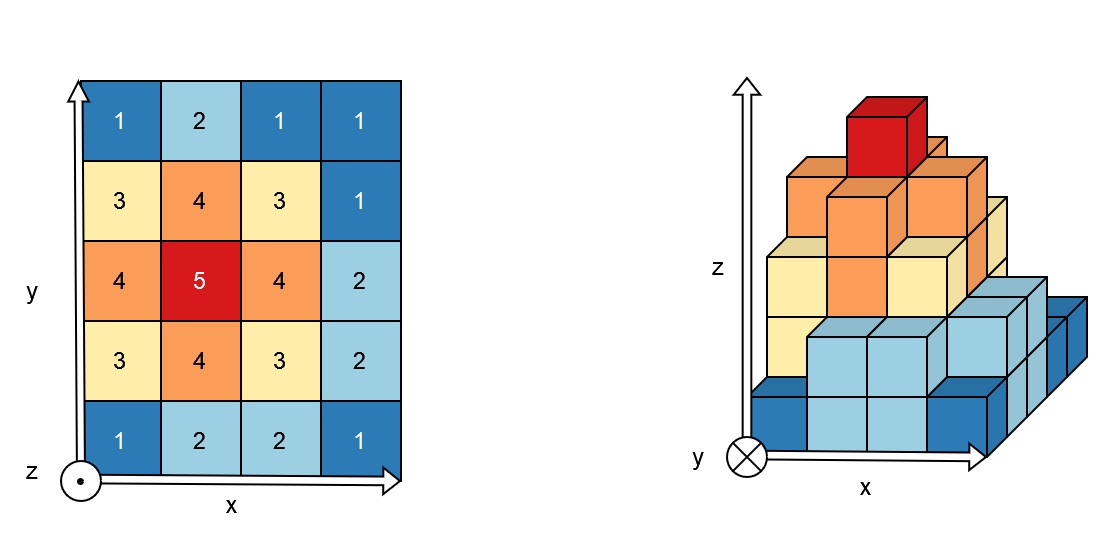

As its name suggests, satellite photogrammetry uses optical satellite acquisitions to generate a Digital Surface Model (DSM), a 2.5D representation built from observed surface altitude data. We speak of 2.5D because the DSM is an array of pixels (a 2D raster) in which each pixel (x, y) holds a single altitude (z).

Fig. 1 2.5D representation

On the left, an example of an array of pixels, and on the right, a volume representation of altitude values.

As with our eyes, altitude (or, to continue the analogy, depth relative to the satellite) is determined from the observed displacement of pixels between images. At least two images, acquired from two different viewpoints, are therefore required. The difference in viewpoint is quantified by the B over H ratio (B/H), where B is the distance between the two satellite positions and H is their altitude.
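A worked version of this relationship, under the classical small-angle approximation: a height difference Δh shifts a point's ground projection by Δh · B/H between the two views, so a disparity of d pixels at ground sampling distance GSD corresponds to Δh ≈ d · GSD / (B/H). The function below is a hypothetical helper for illustration, not part of CARS, and it ignores the full sensor geometry that real triangulation relies on.

```python
def height_from_disparity(disparity_px: float, gsd_m: float, b_over_h: float) -> float:
    """Approximate height difference (metres) for a disparity given in pixels.

    Small-angle stereo approximation (hypothetical helper, not a CARS API):
    a height difference dh produces a ground parallax of dh * (B/H),
    so dh = disparity * GSD / (B/H).
    """
    if gsd_m <= 0 or b_over_h <= 0:
        raise ValueError("GSD and B/H must be strictly positive")
    parallax_m = disparity_px * gsd_m  # disparity converted to ground units
    return parallax_m / b_over_h


# Hypothetical acquisition: 0.5 m pixels, B/H = 0.25
print(height_from_disparity(1.0, 0.5, 0.25))   # one pixel of disparity → 2.0
print(height_from_disparity(0.25, 0.5, 0.25))  # a quarter-pixel matching error → 0.5
```

With these values, one pixel of disparity corresponds to about 2 m of height and a quarter-pixel matching error to about 0.5 m: a larger B/H improves altimetric precision, at the cost of images that look more different and are harder to match.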

Figures: B over H ratio, Two viewpoints

Any raster that GDAL can read can be used as CARS input. In addition to images, the photogrammetric process requires geometric models. Rational Polynomial Coefficients (RPCs) provide a compact representation of the ground-to-image geometry, giving a relationship between:

Image coordinates + altitude and ground coordinates (direct model: image to ground)

Ground coordinates + altitude and image coordinates (inverse model: ground to image)

These coefficients are usually stored in the RPC*XML files.
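To make the inverse model concrete, the sketch below evaluates an RPC ground-to-image projection. It assumes the coefficients have been read as a dictionary of strings using the key names of GDAL's RPC metadata domain (listed in `SCALAR_KEYS` and `COEFF_KEYS`), for instance with `gdal.Open(path).GetMetadata("RPC")` from the GDAL Python bindings. The term ordering follows the common RPC00B convention; check it against your product's documentation. The helper names are hypothetical, and this is not how CARS itself evaluates its geometric models.

```python
from typing import Any, Dict, List, Tuple

# Key names as exposed by GDAL's "RPC" metadata domain
SCALAR_KEYS = (
    "LINE_OFF", "SAMP_OFF", "LAT_OFF", "LONG_OFF", "HEIGHT_OFF",
    "LINE_SCALE", "SAMP_SCALE", "LAT_SCALE", "LONG_SCALE", "HEIGHT_SCALE",
)
COEFF_KEYS = ("LINE_NUM_COEFF", "LINE_DEN_COEFF", "SAMP_NUM_COEFF", "SAMP_DEN_COEFF")


def parse_rpc_metadata(md: Dict[str, str]) -> Dict[str, Any]:
    """Convert RPC metadata strings into floats and 20-coefficient lists."""
    rpc: Dict[str, Any] = {}
    for key in SCALAR_KEYS:
        # Keep only the first token, in case a unit follows the value
        rpc[key] = float(md[key].split()[0])
    for key in COEFF_KEYS:
        values = [float(v) for v in md[key].split()]
        if len(values) != 20:
            raise ValueError(f"{key}: expected 20 coefficients, got {len(values)}")
        rpc[key] = values
    return rpc


def rpc_terms(p: float, l: float, h: float) -> Tuple[float, ...]:
    """The 20 cubic monomials in RPC00B order.

    p = normalized latitude, l = normalized longitude, h = normalized height.
    """
    return (
        1.0, l, p, h,
        l * p, l * h, p * h, l * l, p * p, h * h,
        p * l * h, l ** 3, l * p * p, l * h * h, l * l * p,
        p ** 3, p * h * h, l * l * h, p * p * h, h ** 3,
    )


def _poly(coeffs: List[float], terms: Tuple[float, ...]) -> float:
    return sum(c * t for c, t in zip(coeffs, terms))


def ground_to_image(lon: float, lat: float, alt: float, rpc: Dict[str, Any]) -> Tuple[float, float]:
    """Inverse model: ground (lon, lat, alt) -> image (row, col)."""
    # Normalize ground coordinates with the offsets and scales
    p = (lat - rpc["LAT_OFF"]) / rpc["LAT_SCALE"]
    l = (lon - rpc["LONG_OFF"]) / rpc["LONG_SCALE"]
    h = (alt - rpc["HEIGHT_OFF"]) / rpc["HEIGHT_SCALE"]
    terms = rpc_terms(p, l, h)
    # Each normalized image coordinate is a ratio of two cubic polynomials
    row_n = _poly(rpc["LINE_NUM_COEFF"], terms) / _poly(rpc["LINE_DEN_COEFF"], terms)
    col_n = _poly(rpc["SAMP_NUM_COEFF"], terms) / _poly(rpc["SAMP_DEN_COEFF"], terms)
    # De-normalize back to pixel units
    row = row_n * rpc["LINE_SCALE"] + rpc["LINE_OFF"]
    col = col_n * rpc["SAMP_SCALE"] + rpc["SAMP_OFF"]
    return row, col
```

The direct model (image to ground) has no closed form in this representation unless the product supplies a separate set of coefficients; it is commonly obtained by iteratively inverting a function like `ground_to_image` at a given altitude.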

From Satellite Images to Digital Surface Model

Generate a DSM step by step

Pipeline figures: Resampling, Matching, Triangulation
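In the triangulation step, each pair of matched pixels defines two lines of sight, one per image, and the 3D point is placed where they (nearly) intersect. Because of matching and model errors the two lines rarely cross exactly, so a common choice is the midpoint of the shortest segment joining them. The sketch below assumes the lines of sight are already expressed as an origin and a direction in a common Cartesian frame (for example Earth-centred coordinates derived from the geometric models); it is a generic midpoint method, not necessarily what CARS implements, and the function names are hypothetical.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _along(o: Vec3, d: Vec3, t: float) -> Vec3:
    return (o[0] + t * d[0], o[1] + t * d[1], o[2] + t * d[2])


def triangulate_midpoint(o1: Vec3, d1: Vec3, o2: Vec3, d2: Vec3) -> Tuple[Vec3, float]:
    """Midpoint of the shortest segment between lines o1 + s*d1 and o2 + t*d2.

    Returns the 3D point and the distance between the two lines, which is
    zero when the lines of sight truly intersect.
    """
    w0 = _sub(o1, o2)
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12 * a * c:
        raise ValueError("lines of sight are (nearly) parallel")
    # Parameters of the closest points on each line
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1, p2 = _along(o1, d1, s), _along(o2, d2, t)
    midpoint = tuple((u + v) / 2 for u, v in zip(p1, p2))
    gap = _dot(_sub(p1, p2), _sub(p1, p2)) ** 0.5
    return midpoint, gap


# Two skew lines of sight, one unit apart along z
point, gap = triangulate_midpoint((0, 0, 0), (1, 0, 0), (2, 5, 1), (0, -1, 0))
print(point, gap)  # → (2.0, 0.0, 0.5) 1.0
```

The returned gap is a convenient quality indicator: unusually large values flag suspicious matches or geometric inconsistencies.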

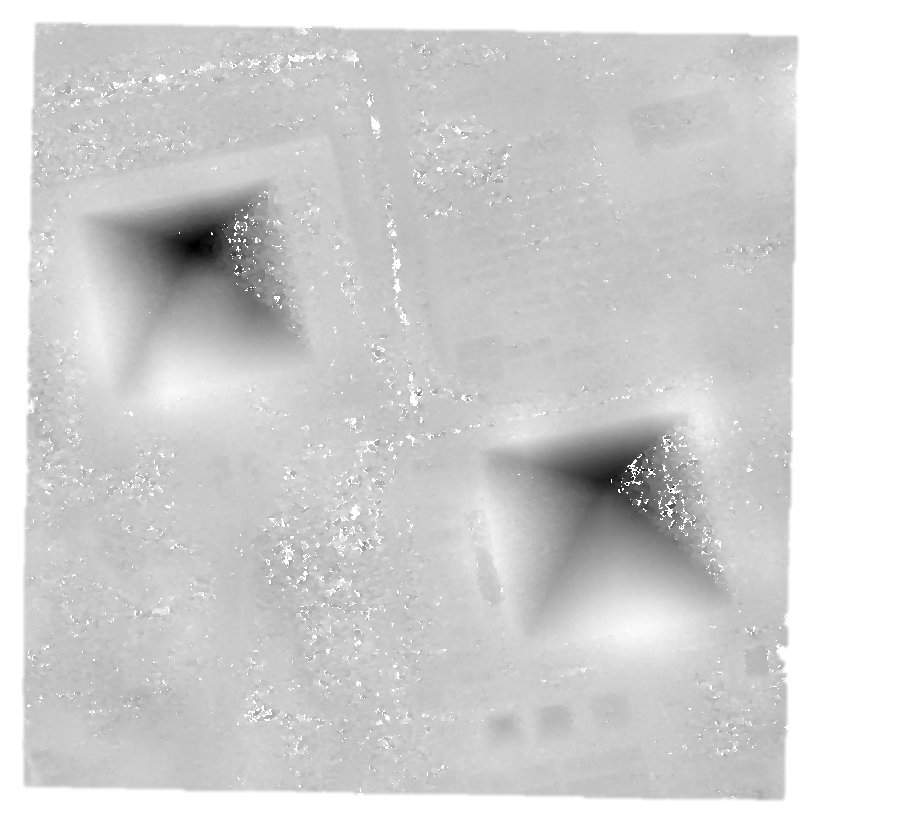

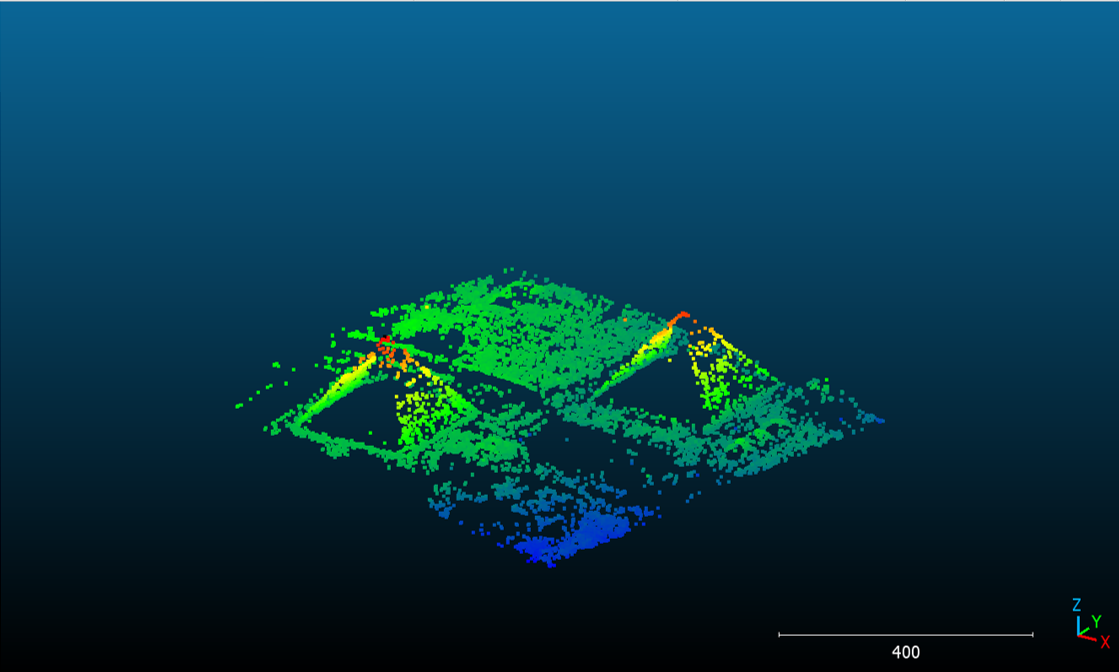

To obtain a raster image, the final step projects each 3D point onto a 2D grid, producing both altitude and color rasters.
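Rasterization can be sketched as binning: the planimetric coordinates (x, y) of each point, assumed to be already in the output cartographic projection, select a grid cell, and all values falling into the same cell are combined. This sketch simply averages them; the actual CARS rasterization may weight points differently, and the function name and grid parameters are hypothetical. Passing color values instead of altitudes yields a color raster in the same way.

```python
import math
from typing import Dict, Iterable, List, Tuple


def rasterize_mean(
    points: Iterable[Tuple[float, float, float]],
    x_min: float,
    y_max: float,
    resolution: float,
    width: int,
    height: int,
) -> List[List[float]]:
    """Project (x, y, value) points onto a north-up grid, averaging per cell.

    Row 0 is the northern edge (y_max); empty cells are NaN (no data).
    """
    sums: Dict[Tuple[int, int], float] = {}
    counts: Dict[Tuple[int, int], int] = {}
    for x, y, value in points:
        col = math.floor((x - x_min) / resolution)
        row = math.floor((y_max - y) / resolution)
        # Points outside the requested footprint are ignored
        if 0 <= row < height and 0 <= col < width:
            sums[(row, col)] = sums.get((row, col), 0.0) + value
            counts[(row, col)] = counts.get((row, col), 0) + 1
    return [
        [sums[(r, c)] / counts[(r, c)] if (r, c) in counts else math.nan for c in range(width)]
        for r in range(height)
    ]
```

A real DSM pipeline would also fill small holes and write the grid with its georeferencing (origin, resolution, projection) so that tools such as QGIS can display it.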

Pipeline figure: Rasterization

Initial Input Digital Elevation Model

CARS currently uses an initial input Digital Elevation Model (DEM), which is integrated into the stereo-rectification to reduce the disparity interval to explore. Any GeoTIFF file can be used.

For example, SRTM data covering the processed area can be obtained with otbcli_DownloadSRTMTiles.

The corresponding parameter is initial_elevation (see Configuration).
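Why the initial DEM helps can be estimated with the same small-angle approximation that links disparity to B/H: each metre of height between the true surface and the rectification altitude costs about (B/H) / GSD pixels of disparity. The sketch below compares the interval to explore when rectifying at a constant altitude with the interval left when the DEM already accounts for the relief. The function name and all numbers are hypothetical, the sign convention of disparity depends on the acquisition geometry, and this is not the CARS computation.

```python
from typing import Tuple


def disparity_interval(
    alt_min: float, alt_max: float, ref_alt: float, gsd_m: float, b_over_h: float
) -> Tuple[float, float]:
    """Disparity interval (pixels) covering surface altitudes in [alt_min, alt_max].

    ref_alt is the altitude used for rectification: disparities measure the
    offset from it. Uses the approximation disparity = dh * (B/H) / GSD.
    """
    px_per_m = b_over_h / gsd_m
    return ((alt_min - ref_alt) * px_per_m, (alt_max - ref_alt) * px_per_m)


gsd, b_over_h = 0.5, 0.25  # hypothetical acquisition

# Without a DEM: rectify at a constant altitude (0 m) and cover the whole relief
print(disparity_interval(0.0, 1000.0, 0.0, gsd, b_over_h))  # → (0.0, 500.0)

# With a DEM: only the residual between the surface and the DEM remains,
# assumed here to lie between -20 m (DEM error) and +50 m (buildings, trees)
print(disparity_interval(-20.0, 50.0, 0.0, gsd, b_over_h))  # → (-10.0, 25.0)
```

In this made-up case, the interval shrinks from 500 to 35 pixels, which directly reduces the cost of dense matching.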

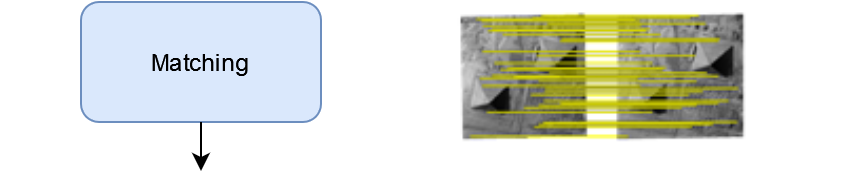

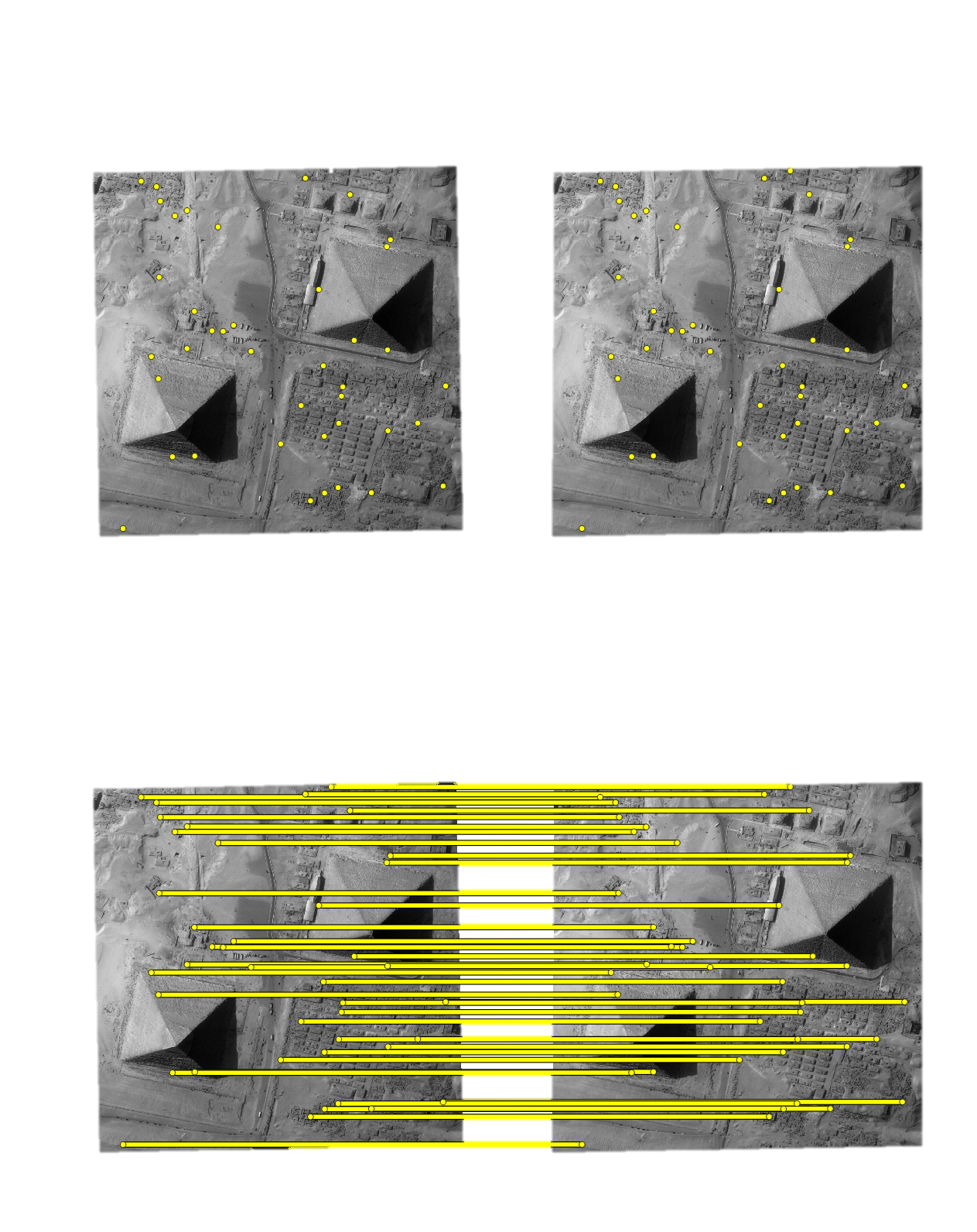

Altimetric exploration and geometric inaccuracies

To reduce the search interval (i.e., the altimetric exploration) of the matching step and thus save computing time, a faster sparse matching step is typically run first. This sparse matching also makes it possible to correct geometric errors, ensuring that the epipolar geometry (which relies on the geometric models) is correct.
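A simplified view of what the sparse step feeds into dense matching: from keypoint matches expressed in epipolar (resampled) coordinates, the horizontal offsets give the disparity interval to explore, while the vertical offsets, which should be zero if the geometry is correct, reveal a geometric bias. The percentile bounds, the margin ratio, and the use of a single median offset are hypothetical choices; a real pipeline may filter matches more carefully and fit a spatially varying correction.

```python
import statistics
from typing import List, Tuple

# ((row_left, col_left), (row_right, col_right)) in epipolar geometry
Match = Tuple[Tuple[float, float], Tuple[float, float]]


def sparse_matching_summary(
    matches: List[Match], margin_ratio: float = 0.1
) -> Tuple[Tuple[float, float], float]:
    """Disparity interval for dense matching and the median vertical offset.

    In a correct epipolar geometry the rows of a match agree and only the
    columns differ.
    """
    if len(matches) < 2:
        raise ValueError("need at least two matches")
    disparities = [col_r - col_l for (_, col_l), (_, col_r) in matches]
    vertical = [row_r - row_l for (row_l, _), (row_r, _) in matches]
    # 1st and 99th percentiles discard isolated outliers
    cuts = statistics.quantiles(disparities, n=100, method="inclusive")
    low, high = cuts[0], cuts[-1]
    # Widen the interval by a safety margin
    margin = (high - low) * margin_ratio
    # A non-zero vertical offset reveals a bias in the geometric models
    return (low - margin, high + margin), statistics.median(vertical)
```

The median is used for the vertical offset because it is insensitive to a few wrong matches, which sparse keypoint matching always produces.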

Sparse matching can be performed with keypoints such as SIFT.
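Keypoint detection and description (SIFT produces 128-dimensional descriptors) are out of scope here. Assuming the descriptors are already computed, the sketch below pairs them with brute-force nearest-neighbour search and Lowe's ratio test, a standard filter for SIFT matches. The 0.8 ratio is the value suggested by Lowe, the 2D toy descriptors and function name are hypothetical, and this is not necessarily the matcher CARS uses; real implementations typically rely on approximate nearest-neighbour search for speed.

```python
import math
from typing import List, Sequence, Tuple


def match_descriptors(
    left: Sequence[Sequence[float]],
    right: Sequence[Sequence[float]],
    ratio: float = 0.8,
) -> List[Tuple[int, int]]:
    """Brute-force nearest-neighbour matching with Lowe's ratio test.

    A left descriptor is matched to its nearest right descriptor only if that
    neighbour is clearly closer than the second nearest one.
    """
    matches: List[Tuple[int, int]] = []
    if len(right) < 2:
        return matches
    for i, desc in enumerate(left):
        # Euclidean distances to every right descriptor, closest first
        ranked = sorted((math.dist(desc, other), j) for j, other in enumerate(right))
        (best, j_best), (second, _) = ranked[0], ranked[1]
        if best < ratio * second:
            matches.append((i, j_best))
    return matches


left = [(0.0, 0.0), (5.0, 5.0)]
right = [(0.1, 0.0), (10.0, 10.0), (5.0, 5.2)]
print(match_descriptors(left, right))  # → [(0, 0), (1, 2)]
```

The ratio test rejects ambiguous keypoints (for example on repetitive textures such as roofs or fields), which matters more here than keeping every possible match.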

Pipeline figure: Matching (sparse)

The result is a sparse point cloud…

Pipeline figure: Triangulation (sparse)

and a sparse digital surface model.

Pipeline figure: Rasterization (sparse)

Mask and Classification Usage

Masks

Classification

See the classification parameter in Configuration.

3D products

dsm.tif contains the Digital Surface Model in the requested cartographic projection, at the ground sampling distance defined by the user. A color image, clr.tif, is also produced; it can be stacked on the DSM (see Getting Started). Both products can be visualized with QGIS, for example.

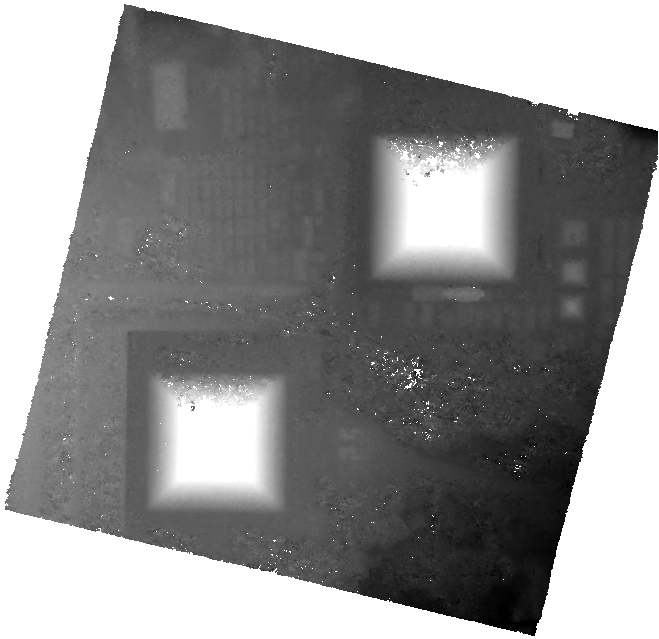

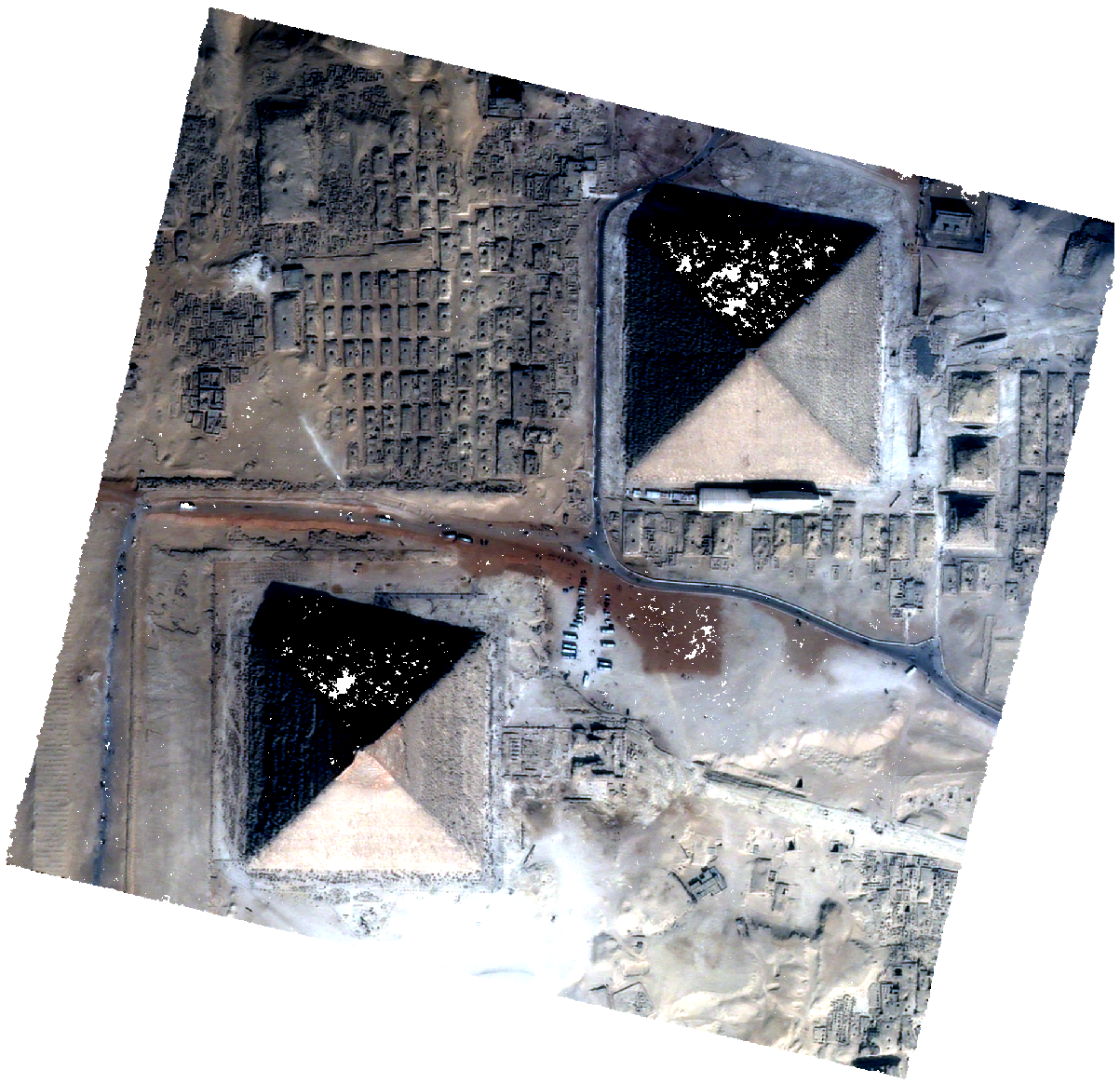

Figures: dsm.tif, clr.tif, QGIS mix, CloudCompare